Running Head: AECOPDs in Electronic Health Records: A review

Funding support: Infrastructure support for this research was provided by the National Institute for Health and Care Research’s Imperial Biomedical Research Centre.

Date of Acceptance: January 7, 2025 │ Publication Online Date: February 5, 2025

Abbreviations: AECOPD=acute exacerbation of COPD; CI=confidence interval; COPD=chronic obstructive pulmonary disease; CPRD=Clinical Practice Research Datalink; DPC=Diagnosis Procedure Combination; eCRF=electronic case report form; EHR=electronic health record; FCE=functional capacity evaluation; GOLD=Global initiative for chronic Obstructive Lung Disease; GP=general practitioner; HES=Hospital Episode Statistics; ICD-9-CM=International Classification of Diseases, Ninth Revision, Clinical Modification; ICD-10=International Classification of Diseases, Tenth Revision; LRTI=lower respiratory tract infection; NPV=negative predictive value; OCS=oral corticosteroid; PPV=positive predictive value; SNOMED CT=Systematized Nomenclature of Medicine Clinical Terms

Citation: Moore E, Stone P, Alizadeh A et al. Validation of acute exacerbation of chronic obstructive pulmonary disease recording in electronic health records: a systematic review. Chronic Obstr Pulm Dis. 2025; 12(2): 190-202. doi: http://doi.org/10.15326/jcopdf.2024.0577


Introduction

Chronic obstructive pulmonary disease (COPD) is characterized by persistent respiratory symptoms, including breathlessness, sputum or cough, and by airflow limitation due to damage to the airway and/or alveoli.1,2 COPD is most commonly caused by cigarette smoke, but pollution and occupational exposures are also risk factors.1,2 Patients with COPD can experience episodes of sustained worsening in their symptoms, referred to as acute exacerbations of COPD (AECOPDs), which can be severe enough to require hospitalization.3 Frequent exacerbations are associated with increased mortality4 and a decrease in lung function,5 exercise capacity,6 and quality of life,7 and each additional AECOPD increases the risk of a subsequent AECOPD and death.8 Additionally, hospitalizations for AECOPDs are very costly and can increase the economic burden on health care services.9-13 In England, the average cost per admission for an AECOPD is estimated to be £1,868,14 and in the United States, the average cost of the most severe admissions is reportedly as high as $44,909.11

Due to the impact of AECOPD admissions on both patients and health care services, there is an impetus15 to complete research on AECOPDs to discover potential interventions to reduce their frequency. Electronic health records (EHRs) provide a relatively quick and inexpensive16 source of data for such studies and are increasingly being utilized in research.17 Diagnoses are recorded in EHRs using a coded clinical terminology such as International Classification of Diseases (ICD) codes,18 which consist of up to 7 letters and numbers and are widely used in hospital discharge summaries and health care billing databases globally. In the United Kingdom, primary care diagnoses are commonly recorded as Read codes (now increasingly obsolete) or as Systematized Nomenclature of Medicine Clinical Terms (SNOMED CT) codes, the coded clinical terms used in general practice databases in the National Health Service.19

However, EHRs are not designed with research in mind – their primary focus being to aid physicians in the management of a patient’s health care,20 or for the purpose of insurance claims.21 For example, the assignment of primary and secondary ICD discharge diagnosis codes for hospitalized patients is often done for reimbursement and, therefore, may be influenced by the anticipated reimbursement for a diagnosis, bringing into question the validity of these data for the identification of patients with a specific condition.22 Furthermore, different databases (and even different clinicians entering records into those databases) use different coding strategies to classify an AECOPD and there is a lack of consensus over which strategies and definitions to use. To ensure studies utilizing EHRs are examining the condition of interest and are not at risk of misclassification, it is important to use validated definitions of the condition of interest.23,24 A validated definition will commonly take the form of a list of codes of a particular clinical terminology, along with an algorithm of how to apply those codes. A validation study will then give estimates on the likelihood of a case detected with the algorithm being a true case.25 Measures of validation include positive predictive value (PPV), negative predictive value (NPV), sensitivity, and specificity.
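As a minimal sketch of how these four measures are derived, the following Python snippet computes them from the 2×2 table that results when an algorithm is compared with a reference standard. The class name, function name, and the counts are hypothetical and chosen only for illustration; they do not come from any study in this review.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """Counts from comparing a detection algorithm with a reference standard."""
    tp: int  # algorithm positive, reference standard positive
    fp: int  # algorithm positive, reference standard negative
    fn: int  # algorithm negative, reference standard positive
    tn: int  # algorithm negative, reference standard negative


def validity_measures(c: ConfusionCounts) -> dict[str, float]:
    """Return sensitivity, specificity, PPV, and NPV as proportions."""
    return {
        "sensitivity": c.tp / (c.tp + c.fn),  # true cases the algorithm finds
        "specificity": c.tn / (c.tn + c.fp),  # non-cases the algorithm rejects
        "ppv": c.tp / (c.tp + c.fp),          # flagged events that are true cases
        "npv": c.tn / (c.tn + c.fn),          # unflagged events that are non-cases
    }


# Hypothetical counts, for illustration only.
example = ConfusionCounts(tp=80, fp=20, fn=40, tn=160)
measures = validity_measures(example)
```

Note that PPV and NPV can only be calculated when the validation sample includes both algorithm-positive and algorithm-negative records; a study that reviews only flagged admissions can report PPV but not sensitivity.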

A previous systematic scoping review by Sivakumaran et al,26 aimed to identify how individuals with COPD are identified within EHRs and found widespread variation in the definitions used to identify people with COPD. Of the 185 eligible studies, only 7 used a case definition which had been validated against a reference standard in the same dataset. They argued that the inconsistencies in methods for identifying people with COPD in EHRs are minimizing the potential for harnessing EHRs worldwide. To our knowledge, there has not been another systematic review examining the identification and validation of AECOPDs in EHRs.

Therefore, in this systematic review we aim to summarize all validated definitions of AECOPD for use in EHRs and administrative claims databases, and in cases where multiple similar definitions are available, provide guidance on the best algorithm to use to ensure an accurate cohort of AECOPD cases is available for researchers using EHRs.

Methods

MEDLINE and Embase (via the Ovid interface) were searched using keywords and Medical Subject Headings terms27,28 related to “exacerbation of COPD,” “electronic health records” or “administrative claims database,” and “validation,” including any relevant synonyms. The full search strategy can be found in Supplementary File 1 in the online supplement. The methodology developed by Benchimol et al,29 along with search strategies from other similar reviews30-34 of validation studies in EHR databases, were used to construct the search strategy for this review. To ensure the literature was comprehensively searched, reference lists from studies that were retrieved were also hand searched.

Study Selection Criteria

All studies validating definitions of AECOPD in EHRs were considered for inclusion in this review. Studies had to be written in English and published between 1946 (MEDLINE) or 1947 (Embase) and May 31, 2024. The specific criteria for inclusion were:

- AECOPD admission data had to come from either an EHR or an administrative claims database that routinely collects health data.

- The detection algorithms for AECOPD had to be compared against a reference standard or gold standard definition (e.g., chart reviews or questionnaires completed by physicians to confirm and validate the diagnosis).

- Finally, a measure of validity had to be available (e.g., sensitivity, specificity, PPV, NPV, or c-statistic) or there had to be a means to calculate one from data within the study.

During the screening process, it became apparent that adding another criterion for inclusion was necessary: potential wider applicability of the algorithm (i.e., the algorithm could be applied to another dataset). As the aim of this review is to recommend algorithms for future research, it was, therefore, decided that studies should be excluded if they could not be easily applied to other datasets. Studies were also excluded if they only validated a diagnosis of COPD, not specifically an AECOPD.

Data Synthesis

The protocol for data management and synthesis is described by Stone et al.35 Two different reviewers (PS and EM) independently screened the articles selected for full-text review and any disagreement between the reviewers was resolved by consensus or third reviewer (JKQ) arbitration. If studies were excluded the reasons were recorded, and the 2 reviewers extracted study details and assessed risk of bias for the included studies independently. Data were extracted into Microsoft Excel (Microsoft Corporation; Redmond, Washington) and included:

- Details of the study (including title, first author name, year of publication, doi)

- The aims of the study or research question

- Details of the EHR database

- A description of the studied population (specific groups, location, and time period)

- A description of the AECOPD detection algorithm(s) (e.g., the list of clinical codes used)

- Details of the reference standard or gold standard that the algorithm(s) were compared against

- The measure(s) of validity that were used (e.g., PPV, NPV, etc.) along with validity results

- The prevalence of AECOPD if available
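One reason prevalence is worth extracting alongside the validity measures is that PPV and NPV, unlike sensitivity and specificity, depend in principle on how common AECOPD is in the validation sample. The sketch below illustrates this relationship; the function name and the sensitivity, specificity, and prevalence values are hypothetical and are not taken from any included study.

```python
def ppv_npv(sensitivity: float, specificity: float, prevalence: float) -> tuple[float, float]:
    """Derive PPV and NPV from sensitivity, specificity, and prevalence (all proportions)."""
    tp = sensitivity * prevalence              # true positives per person assessed
    fn = (1 - sensitivity) * prevalence        # false negatives
    tn = specificity * (1 - prevalence)        # true negatives
    fp = (1 - specificity) * (1 - prevalence)  # false positives
    return tp / (tp + fp), tn / (tn + fn)


# Hypothetical algorithm (sensitivity 80%, specificity 90%) applied at three prevalences.
for prevalence in (0.1, 0.3, 0.5):
    ppv, npv = ppv_npv(0.80, 0.90, prevalence)
    print(f"prevalence={prevalence:.0%}  PPV={ppv:.1%}  NPV={npv:.1%}")
```

With sensitivity and specificity held fixed, PPV rises and NPV falls as prevalence increases, so a PPV reported in one database may not transfer to a dataset with a different case mix.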

The validity of the AECOPD detection algorithm was the primary outcome measure in this review.

The risk of bias in individual studies was assessed using a quality assessment tool36 for diagnostic accuracy studies known as the QUADAS-2. The QUADAS-2 was specifically adapted to this review using the reporting checklist developed by Benchimol et al29 for use in validation studies of health administrative data. A copy of the adapted QUADAS-2 risk of bias assessment used in this study can be found in Supplementary File 2 in the online supplement.

The registered protocol can be found on the International Prospective Register of Systematic Reviews (PROSPERO) (registration number: CRD42019130863) and has been published elsewhere.37

Results

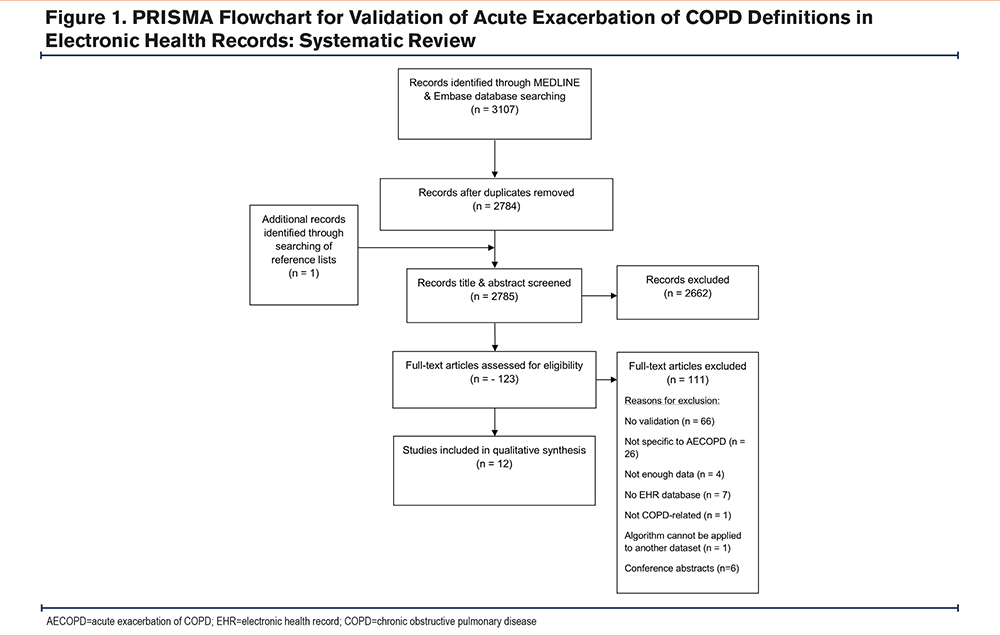

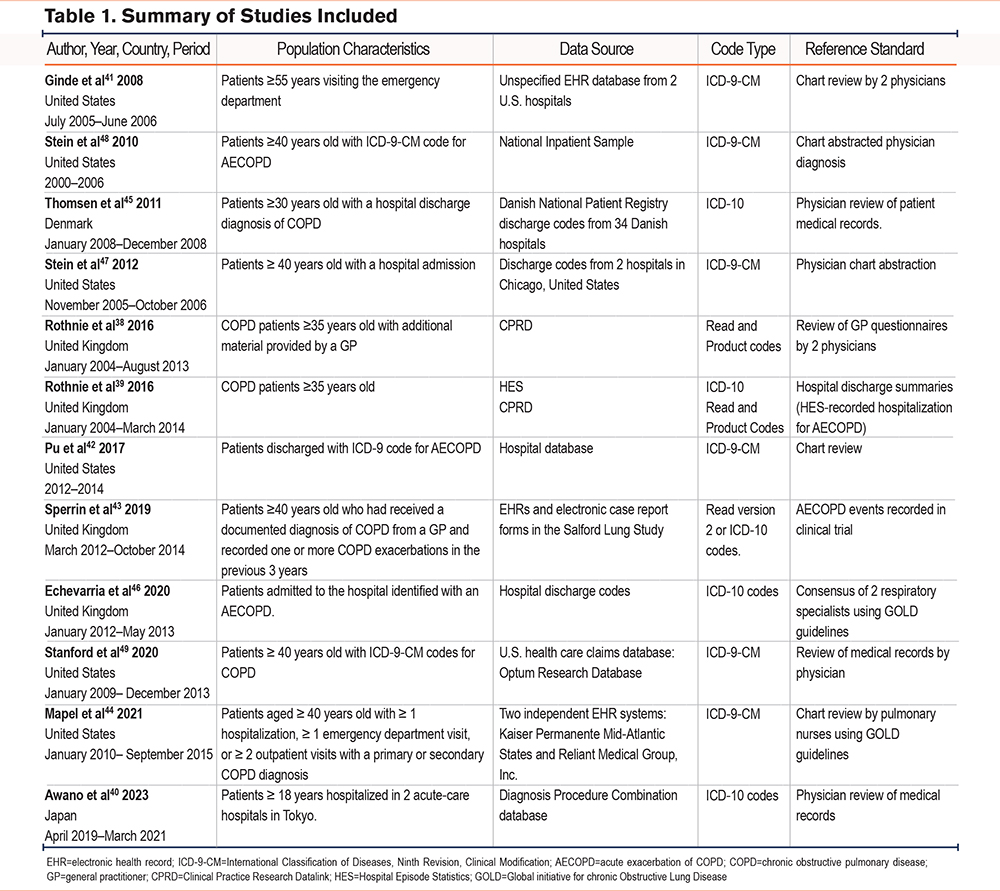

From the 2784 articles found by the search strategy, 12 studies38-49 were eligible for inclusion and were included in the review (Figure 1). Six of the studies were in databases from the United States, 4 were from English national patient databases, one was from a Japanese database, and one came from the Danish National Patient Registry (summarized in Table 1). Full details of each study can be found in Supplementary File 3 in the online supplement. The clinical terminology used to retrieve data on admissions was either ICD-9-Clinical Modification (CM) (6 studies), ICD-10 (5 studies), or Read codes (2 studies by Rothnie et al38,39). The ages of patients varied between studies, with one study using a broad definition of patients aged ≥18 years,40 whereas another study was more selective, including only patients ≥55 years old.41 One conference abstract, by Pu et al,42 was included; it has not been through peer review, but it contained sufficient detail to allow for assessment in this review. For the reference standard, 9 studies used chart review or consensus by physicians and nurses. One study by Rothnie et al used a review of general practitioner (GP) questionnaires38 and a subsequent study by Rothnie et al39 utilized hospital discharge summaries. Finally, for their reference standard, Sperrin et al43 compared the index test with AECOPD events recorded in clinical trial data.

Risk of Bias Assessment

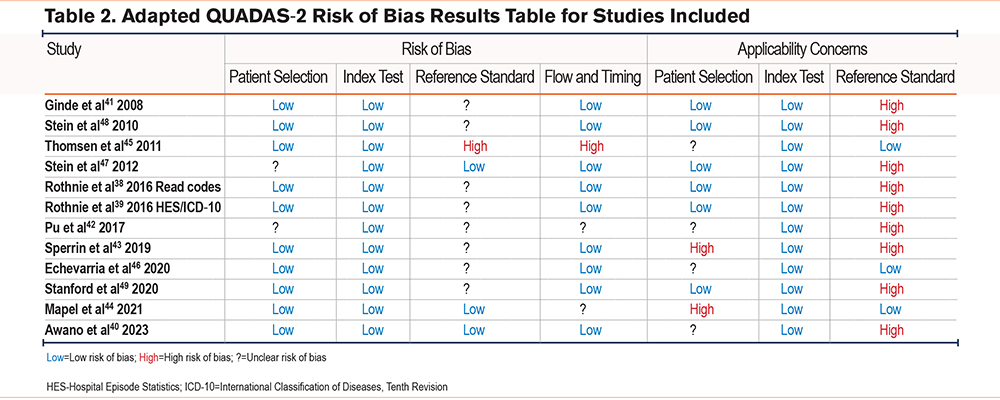

The risk of bias for each study is shown in Table 2. None of the studies had a low risk of bias for all domains assessed. The reference standard was the domain in which studies struggled to score a low risk of bias. Only 2 out of the 12 studies scored a low risk of bias for the reference standard (Mapel et al44 and Awano et al40) and only 3 studies had a low risk of bias under applicability concerns because they used spirometry in the reference standard to confirm a diagnosis of COPD (Thomsen et al,45 Echevarria et al,46 and Mapel et al44). The reference standard used by Thomsen et al45 scored high risk of bias because physicians reviewing the charts were not blinded to the diagnosis codes of the index test and, therefore, this could have influenced the interpretation and classification of the reference standard. This study was also at high risk of bias for flow and timing: it was unclear if all patients were included in the analysis because the busy hospitals (that may have had more severe cases) were unable to return all the details from the patient record. One other study (Sperrin et al43) had an unclear risk of bias for the reference standard as it was unclear if the results were interpreted without knowledge of the index test. In the Stein et al (2012) study,47 patients who were transferred from another hospital were excluded and, therefore, this study scored unclear risk of bias for patient selection. The patients who were excluded may also have been more severe cases. The Rothnie et al study38 that validated primary care Read code definitions had a high risk of applicability concerns because they compared the results of Read code definitions against Hospital Episode Statistics (HES) ICD-10 code definitions (as the reference standard) and the results were not validated by physicians (the gold standard).
Finally, 2 studies scored a high risk of bias for patient selection (Sperrin et al43 and Mapel et al44) because they used more than one database from which they selected patients and this may have introduced bias as patients were not from one specific setting.

Summary of Results for Studies Validating Use of International Classification of Diseases-Ninth Revision Codes

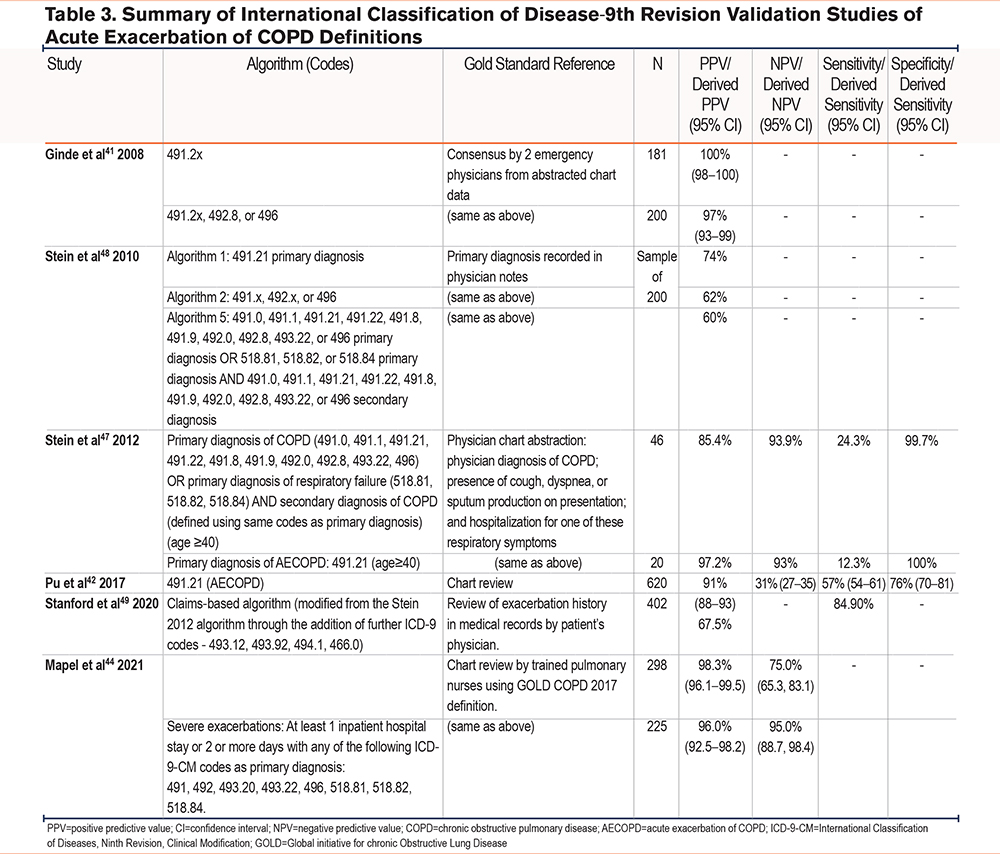

Studies validating ICD-9-CM codes (Table 3) were all carried out in the United States. All studies validated similar ICD-9-CM codes, and the single AECOPD code of 491.2x provided the best PPV in all studies, ranging between 60% and 100%. Ginde et al41 demonstrated high PPV (97%) for the detection of AECOPDs using 3 ICD-9-CM codes. However, results from Stein et al48 (2010) reported lower PPV values, and these varied depending on which algorithm was used (74% for algorithm 1, 62% for algorithm 2, and 60% for algorithm 5), suggesting that the algorithms they used for identifying AECOPDs may identify a substantial number of patients admitted for alternative conditions. A subsequent study by Stein et al47 in 2012 evaluated the 491.21 ICD-9-CM code in a comparison with other algorithms but found that sensitivity was reduced when using codes for a primary diagnosis of COPD (12.3%) or a secondary diagnosis of COPD with a primary diagnosis of respiratory failure (24.3%). Their results implied that ICD-9-CM codes may undercount hospitalizations for AECOPDs and it is questionable whether researchers should rely on ICD-9-CM codes alone to identify AECOPD admissions. Pu et al42 also validated the use of ICD-9-CM code 491.21 and found that using codes such as this could miss a significant proportion of patients with AECOPDs. In a more recent study, Stanford et al49 modified the algorithm by Stein et al47 in 2012 through the addition of further ICD-9-CM codes (493.12, 493.92, 494.1, 466.0) in order to identify exacerbation-related hospital visits and included events for which diagnosis codes may have been a primary or secondary diagnosis. The final algorithm in this study had a high sensitivity of 84.9% and PPV of 67.5%. Finally, a study in 2021 by Mapel et al44 developed 2 algorithms to identify moderate and severe COPD exacerbations. 
They used a broader algorithm using 18 different ICD-9-CM codes and required steroid or antibiotic prescriptions to identify moderate exacerbations. For severe exacerbations, the records required an inpatient hospital stay of 2 or more days plus one of 8 different ICD-9-CM codes. For both moderate and severe exacerbations, the PPV was high (98.3% and 96.0% respectively).
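The two-tier rule described for Mapel et al44 can be sketched roughly as follows. This is an illustration under stated assumptions, not the authors' implementation: the code sets are placeholders because the 18 and 8 ICD-9-CM codes are not listed here, the record structure and names are hypothetical, and checking the severe rule before the moderate rule is an assumption.

```python
from dataclasses import dataclass

# Placeholder code sets (assumption): the actual lists of 18 and 8 ICD-9-CM
# codes are not reproduced in this review, so a single code stands in for each.
MODERATE_CODES = {"491.21"}
SEVERE_CODES = {"491.21"}


@dataclass
class Encounter:
    """Hypothetical record structure for one health care encounter."""
    diagnosis_codes: set[str]
    inpatient_days: int = 0
    steroid_prescribed: bool = False
    antibiotic_prescribed: bool = False


def classify_exacerbation(e: Encounter) -> str:
    """Return 'severe', 'moderate', or 'none' for one encounter."""
    # Severe: inpatient stay of 2 or more days plus a qualifying code.
    if e.inpatient_days >= 2 and e.diagnosis_codes & SEVERE_CODES:
        return "severe"
    # Moderate: qualifying code plus a steroid or antibiotic prescription.
    if e.diagnosis_codes & MODERATE_CODES and (e.steroid_prescribed or e.antibiotic_prescribed):
        return "moderate"
    return "none"
```

Requiring treatment or length-of-stay evidence in addition to a diagnosis code makes the definition more restrictive, which is consistent with the high PPVs reported for this approach.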

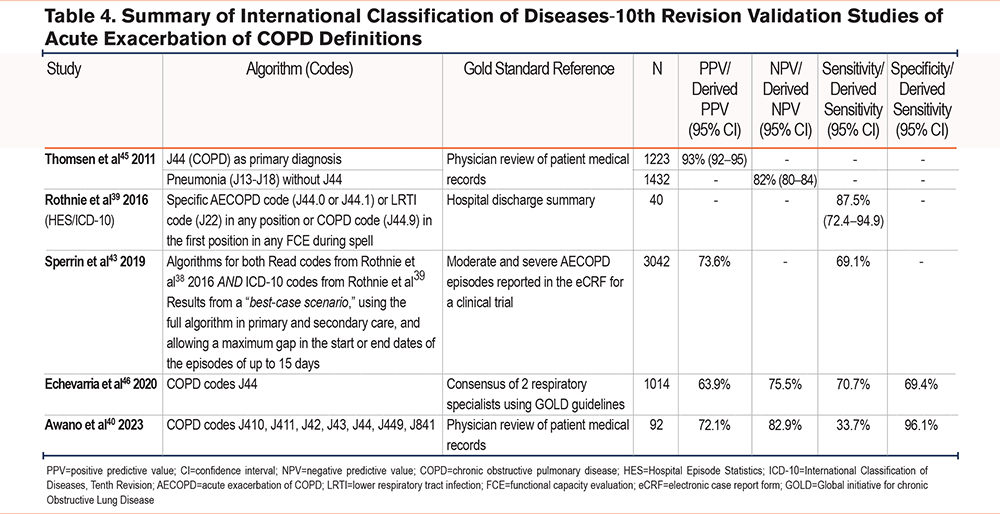

Summary of Results for Studies Validating International Classification of Diseases, Tenth Revision Codes

Of the studies using ICD-10 codes to identify AECOPDs (Table 4), 3 were carried out in the United Kingdom,39,43,46 one in Japan,40 and one in Denmark.45 All studies validated variations of J44 COPD codes, except for Awano et al,40 who validated a broader collection of ICD-10 codes (J410, J411, J42, J43, J44, J449, J841). Specificity and NPV were high in this study (96.1% and 82.9%, respectively), but sensitivity was low (33.7%). In the Danish study,45 J44 was used as a parent code for primary AECOPD diagnosis, resulting in the best PPV (93%), and good PPVs were found for all 3 algorithms tested. In the United Kingdom, in Rothnie et al,39 the highest sensitivity (87.5%) was found using a COPD code (J44.9) as the primary diagnosis or using codes for AECOPD (J44.0 or J44.1) or lower respiratory tract infections (LRTI) (J22) as either primary or secondary diagnosis codes. This algorithm by Rothnie et al39 may represent a good compromise between high sensitivity and high PPV because it combines high sensitivity with a design similar to the algorithm by Thomsen et al,45 which gave a high PPV. In a more recent U.K. study, Sperrin et al43 used algorithms for Read codes and ICD-10 codes from both Rothnie et al studies.38,39 Results were populated from a best-case scenario, using the full algorithm in primary and secondary care, and allowing a maximum gap in the start or end dates of the episodes of up to 15 days. This gave a PPV of 73.6% and a sensitivity of 69.1%. Finally, Echevarria et al,46 also in the United Kingdom using J44 ICD-10 codes alone, reported a PPV of 63.9%, an NPV of 75.5%, a sensitivity of 70.7%, and specificity of 69.4%.
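The highest-sensitivity algorithm reported by Rothnie et al39 distinguishes codes that count only as the primary diagnosis from codes that count in any position. A minimal sketch of that rule is shown below; the function name, the argument layout, and the dotted code formatting are assumptions, and real admission data may store codes differently (for example, without the dot).

```python
# Codes as described in the text; the dotted formatting is an assumption.
PRIMARY_ONLY = {"J44.9"}                  # COPD code, counts only as the primary diagnosis
ANY_POSITION = {"J44.0", "J44.1", "J22"}  # AECOPD or LRTI codes, primary or secondary


def is_aecopd_admission(primary: str, secondary: list[str]) -> bool:
    """Flag an admission as a likely AECOPD hospitalization."""
    if primary in PRIMARY_ONLY:
        return True
    # AECOPD and LRTI codes qualify in either the primary or a secondary position.
    return any(code in ANY_POSITION for code in [primary, *secondary])
```

Under this rule, an admission with J44.9 recorded only as a secondary diagnosis is not flagged, whereas J22 in any position is.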

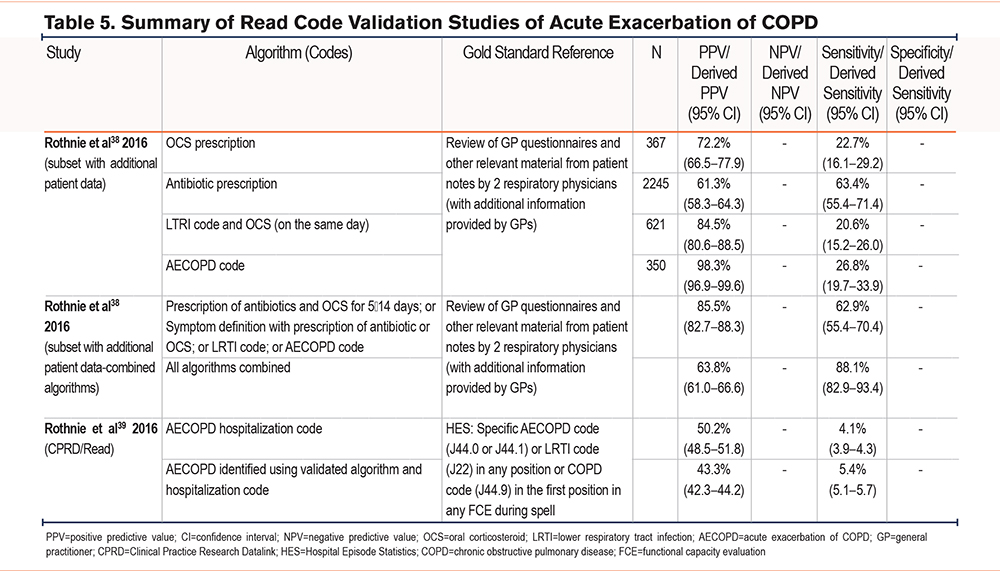

Summary of Results for Studies Validating Read Codes

Two studies38,39 validating the use of Read codes were done in the United Kingdom by Rothnie et al in 2016 (Table 5). The first Rothnie et al study validated the use of Read codes in English primary care against a reference standard of GP questionnaires.38 PPV and sensitivity were used to validate the algorithms, and the best compromise between the 2 measures was found when combining the algorithms that each had a PPV >75%. The combination included antibiotic and oral corticosteroid prescriptions for 5–14 days, a symptom (such as dyspnea, cough, or sputum) in addition to the prescription of antibiotics or oral corticosteroids, an LRTI code, or an AECOPD code, and produced a PPV of 85.5% and sensitivity of 62.9%. Using the same definitions as the first, the second study validated the algorithms against HES ICD-10 codes.39
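The combined primary care definition can be read as four alternative criteria, any one of which flags an event. The sketch below is one interpretation under stated assumptions: the record fields and function names are hypothetical, a prescription length of 0 stands for "not prescribed," and the 5–14 day window is applied only to the combined antibiotic and oral corticosteroid criterion because the text does not state a duration for the symptom-plus-prescription criterion.

```python
from dataclasses import dataclass


@dataclass
class PrimaryCareEvent:
    """Hypothetical summary of what was recorded on one day in primary care."""
    antibiotic_days: int = 0  # prescription length in days; 0 means not prescribed
    ocs_days: int = 0         # oral corticosteroid course length; 0 means not prescribed
    has_symptom: bool = False  # e.g., dyspnea, cough, or sputum code
    has_lrti_code: bool = False
    has_aecopd_code: bool = False


def in_course_window(days: int) -> bool:
    """True for a prescription lasting 5 to 14 days."""
    return 5 <= days <= 14


def is_aecopd_event(e: PrimaryCareEvent) -> bool:
    """Flag an event if any one of the four criteria is met."""
    both_drugs = in_course_window(e.antibiotic_days) and in_course_window(e.ocs_days)
    # Assumption: any antibiotic or OCS prescription counts alongside a symptom.
    symptom_plus_rx = e.has_symptom and (e.antibiotic_days > 0 or e.ocs_days > 0)
    return both_drugs or symptom_plus_rx or e.has_lrti_code or e.has_aecopd_code
```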

A quantitative synthesis was unfortunately not possible because of the limited number of studies in which the same clinical terminology was used, and a lack of data on true and false positives and negatives.

Discussion

This systematic review assessed different methods for validating the recording of acute exacerbations of COPD in EHRs and found that a variety of definitions were used. Studies used ICD-9-CM codes, ICD-10 codes, and different combinations of clinical codes in both primary care (using Read codes) and secondary care settings.

Results from studies validating ICD-9-CM codes suggest that ICD-9-CM codes alone may not accurately identify all patients with AECOPD. The validation measurements varied considerably depending on which codes or algorithms were used. The code 491.21 is used to classify obstructive chronic bronchitis with acute exacerbation and this code had a high PPV of 100% in one study41 but had low sensitivity in other studies42,47 suggesting that ICD-9-CM codes alone may underestimate the burden of hospitalizations for COPD. One study49 modified their algorithms through the addition of further ICD-9-CM codes, for example using those to denote asthma with acute exacerbation, bronchiectasis, and acute bronchitis. Although this improved the sensitivity of the algorithm (84.9%), the ability to detect true positives was not as high (PPV 67.5%). However, using multiple ICD-9-CM codes alongside additional information on treatment from care records such as the prescription of steroids or antibiotics, gave high PPVs for moderate (98.3%) and severe (96.0%) AECOPD in another study.44

Our review also found that, as with ICD-9-CM codes, using ICD-10 codes alone in the algorithms may not effectively identify admissions for AECOPD in EHRs. In the United Kingdom, Echevarria et al46 found that using ICD-10 codes alone missed almost a third of patients admitted with AECOPD in their study. By contrast, the Danish study45 found that using a J44 parent code as primary diagnosis gave a high PPV (93%). However, in this study, the reviewers were not blinded to the diagnosis codes and, therefore, knowledge of the codes could have influenced the physicians’ assessment. The recent study in the Japanese database Diagnosis Procedure Combination (DPC)40 combined multiple ICD-10 codes in addition to J44, including those for bronchitis (J40, J411, J42), emphysema (J43), and acute interstitial pneumonitis (J841). Although the specificity and NPV were high (96% and 83%, respectively), sensitivity was low (34%). The authors presumed that diagnoses for chronic diseases such as COPD had not been recorded in the DPC database as specific tests or treatments were not required during hospitalization. This suggests the use of other clinical data in addition to ICD-10 codes would improve the identification of hospitalizations for AECOPD. Rothnie et al completed 2 studies38,39 in 2016 validating the recording of AECOPD cases within U.K. health records. In the first study,38 the data were collected purely from primary health care via the Clinical Practice Research Datalink (CPRD) database using Read codes and product codes. It was suggested that using multiple codes increased the validity; in this case, AECOPD codes, LRTI codes, antibiotic codes, and oral corticosteroid codes were utilized. This combination of codes led to a PPV of 85.5% but a lower sensitivity of 62.9%, suggesting that although the strategy was valid it would underestimate the number of events.
The second study39 then aimed to identify hospitalizations for AECOPD in CPRD using secondary care data linked to HES and found a sensitivity of 87.5%. However, when using a code suggesting hospitalization for an AECOPD in primary care data alone without HES linkage, a much lower PPV of 50.2% and a sensitivity of 4.1% were found. This implies that primary care data alone do not accurately identify hospitalizations for AECOPD, and researchers should use primary care data that are linked to data from secondary care.

As the screening process was undertaken, it became clear that one study by Shah et al50 stood out for using very different algorithms from the other studies. For the index test, they compared 6 models with different combinations of clinical and administrative data detailing care steps for patients admitted to the hospital including COPD “power plans,” bronchodilator protocol use, billing diagnosis, and treatments administered such as steroid use and oxygen management. Unlike other studies in which ICD-10 codes were used as the index test, this study used the final billing ICD-10 diagnosis for AECOPD as the reference standard for comparing model performance. Since the aim of this review is to provide guidance on which algorithms provide the most accurate cohort of AECOPDs, it became apparent that researchers should be able to apply the recommended algorithms to other datasets, and, therefore, our additional exclusion criterion ruled out this study from our review.

None of the studies had a low risk of bias for all domains assessed, meaning that the validity of all the studies may be overestimated. Most studies scored a high risk of bias for the applicability of the reference standard because they did not use spirometry to confirm the COPD diagnosis. Spirometry is a key component of a COPD diagnosis and, therefore, not including it to confirm COPD in the reference standard could increase the risk of bias. Stein et al47 (2012) explained that they deliberately did not confirm the COPD diagnosis in their reference standard with spirometry as "it would have led to a narrowly selected (and potentially biased) sample with which to evaluate the validity of ICD-9-CM algorithms." In this case, the authors were aiming for sensitivity over specificity. However, their definition should still be considered at risk of bias because it makes it more likely that the reference standard could include non-COPD cases.

A similar systematic review was conducted looking at the validation of codes for asthma within EHRs.30 They conducted a search and found 13 studies that fit their inclusion criteria, particularly choosing to focus on the databases and codes used, along with any sensitivity or specificity measures. As in our review in which the validity of definitions of AECOPD varied across different databases and settings, they found that case definitions and methods of asthma diagnosis validation also varied widely across different EHR databases. The authors suggested that the source of the EHR databases (primary care, secondary care, and urgent care) could influence the case definition of asthma and the way the validation is conducted. For example, patients seeking care for asthma symptoms might present differently in each setting, and the test measures, therefore, might reflect this.

In this study, we have found that using single codes to search for case definitions of AECOPD in EHRs may not effectively identify admissions for AECOPD. Some of the research has shown that modifying algorithms with additional codes may improve sensitivity but at the expense of accurately identifying true positives. This review and others26,30 have shown that different research questions may necessitate different case definitions. For example, if researchers want to prioritize specificity over sensitivity, a more restrictive definition of AECOPD would be used, and vice versa. The Stein et al48 (2010) findings suggested that the selection of an algorithm should depend on its intended purpose. For example, if the intent is to identify patients for quality measurement, an algorithm with the highest PPV would be desirable (e.g., their first algorithm using ICD-9-CM code 491.21). However, if the intent is to estimate the overall burden of disease, then the authors suggested using a more inclusive approach. We propose that a Delphi study would be useful to obtain the consensus of expert clinicians and researchers to decide which algorithms would be recommended in different research scenarios.
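The idea that the choice of algorithm should follow its intended purpose can be illustrated with a small sketch. The PPV and sensitivity values are those reported above, but the function, the purpose labels, and the short names are hypothetical. Because these figures come from different databases and coding systems, this is only an illustration of the selection logic, not a head-to-head comparison or a recommendation.

```python
from typing import NamedTuple


class Candidate(NamedTuple):
    name: str
    ppv: float          # percent, as reported in this review
    sensitivity: float  # percent, as reported in this review


CANDIDATES = [
    Candidate("Stanford et al, ICD-9-CM", 67.5, 84.9),
    Candidate("Rothnie et al, Read codes", 85.5, 62.9),
    Candidate("Sperrin et al, Read and ICD-10", 73.6, 69.1),
    Candidate("Echevarria et al, ICD-10 J44", 63.9, 70.7),
]


def choose(candidates: list[Candidate], purpose: str) -> Candidate:
    """Pick a candidate by the measure that matters most for the stated purpose."""
    if purpose == "quality_measurement":
        # Few false positives matter most, so prioritize PPV.
        return max(candidates, key=lambda c: c.ppv)
    if purpose == "burden_estimation":
        # Missing as few true events as possible matters most, so prioritize sensitivity.
        return max(candidates, key=lambda c: c.sensitivity)
    raise ValueError(f"unknown purpose: {purpose}")
```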

There are some strengths and limitations to our study. To our knowledge, this is the first review to systematically review studies that validated definitions of AECOPD in EHRs. We used broad search criteria which meant that we could review a variety of different codes and algorithms used in different databases globally. However, we found that in many studies, the clinical codes utilized were not well reported or were difficult to obtain. Our risk of bias assessment, the adapted QUADAS-2, may have unfairly scored studies that did not use spirometry in the reference standard with a high risk of bias because spirometry was unavailable to confirm the diagnosis of COPD. However, this highlights the importance and need for spirometry data in EHRs. Finally, we were unable to carry out a quantitative analysis because of the limited number of studies included in our review.

COPD and acute exacerbations are underdiagnosed in the general population51 and this is related to the underuse of spirometry as we found in many of the studies. Furthermore, recordings of AECOPDs in EHRs tend to capture events that lead to health care utilization, such as moderate and severe exacerbations, therefore, limiting the capture of mild exacerbations. These are important points for researchers to consider in the future when devising methods to identify AECOPDs in EHRs, and to find ways of balancing sensitivity versus specificity.

Conclusion

The methods used for validating definitions of AECOPDs in EHRs vary, with different algorithms and case definitions used in different databases globally and in different settings such as primary and secondary care. Using single codes to identify COPD exacerbations (for example ICD-9-CM code 491.21 or ICD-10 code J44) was found to have a high PPV in some studies but low sensitivity in others. This means that the algorithms used can positively identify cases of AECOPD within datasets but may not accurately identify all cases. At present, there is no clear consensus on which definition provides the highest validity or the most sensitive and specific results when searching EHRs for AECOPD cases. The variation between studies in defining COPD exacerbations restricts the ability of researchers to reliably compare findings and provide robust evidence. Consensus from experts is required to guide researchers on which definitions to use in different research scenarios. Researchers should endeavor to make all their disease definitions easily accessible so that others can validate and replicate them.

Acknowledgments

Author contributions: JKQ, EM, and PS were responsible for the conception and design of the work. All authors were responsible for the acquisition of data, data analysis, and interpretation. EM and PS were equally responsible for writing the manuscript. In addition, all authors have read and approved of the manuscript.

The authors would like to thank Dr. Nikhil Sood for his research contribution towards this review.

Declaration of Interests

EM, PS, AA, JS, SD, and SA have nothing to declare. JKQ has been supported by institutional research grants from the Medical Research Council, the National Institute for Health and Care Research, Health Data Research, GSK, Boehringer Ingelheim, AstraZeneca, Insmed, and Sanofi and received personal fees for advisory board participation, and consultancy or speaking fees from GSK, Chiesi, and AstraZeneca.